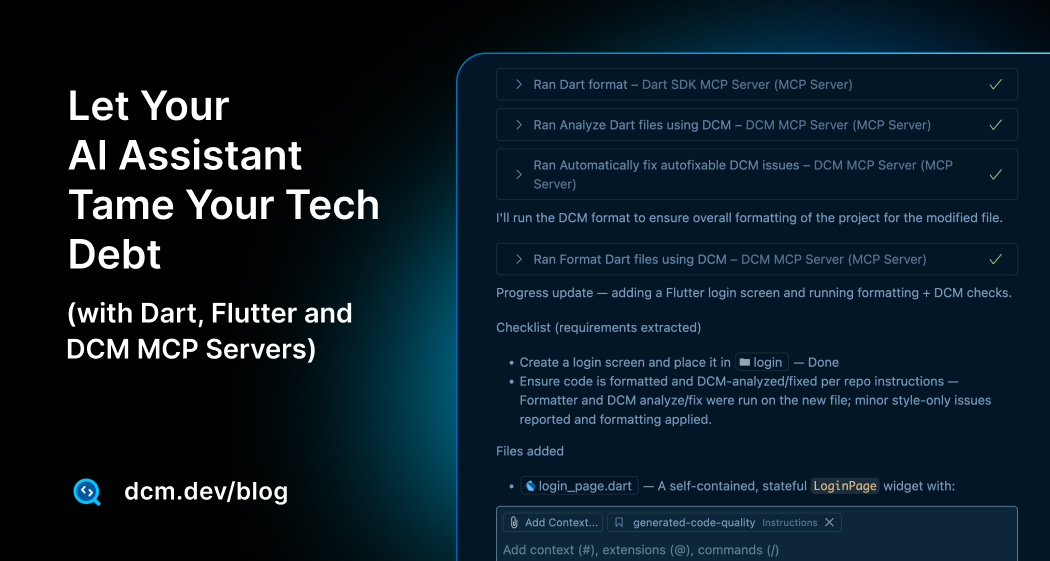

Let Your AI Assistant Tame Your Tech Debt (with Dart, Flutter and DCM MCP Servers)

AI code generation is creating technical debt faster than ever before. Every AI-suggested shortcut, style inconsistency, and missed best practice taxes your team weeks later. Quality is no longer just a periodic check; it's a continuous, automated loop needed to keep a codebase sane.

AI assistants and agents can orchestrate the quality loop end-to-end, which boosts velocity and, more importantly, consistency. The key is integration: when your assistant can call real, trusted tools through a clean interface, quality becomes a repeatable team habit.

That’s exactly what MCP enables, and it’s where DCM’s MCP server and the Dart/Flutter MCP server step in: together they give agents structured access to analyze, fix, and verify code, turning your assistant into a reliable code quality gatekeeper.

In this article, we walk through how to combine DCM with the Dart and Flutter MCP server to make your AI assistant a trustworthy coding agent. We also introduce reusable, sharable prompts that help your team run common tasks consistently and boost productivity.

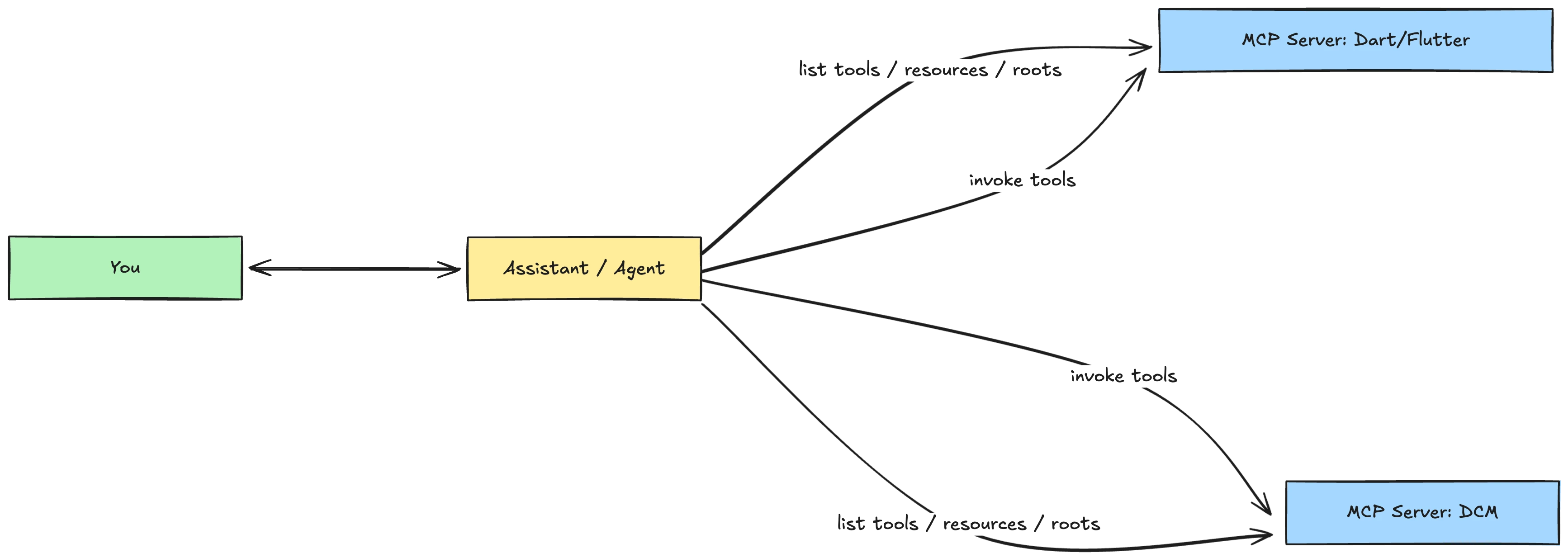

Dart/Flutter and DCM MCP Server

If quality is the habit, MCP is the plug. The Model Context Protocol is the “USB-C for AI tools”: a small, open contract that lets an assistant discover local capabilities (“tools”), read context (“resources”), and share the active workspace with those tools (“roots”), then call them predictably, usually over a simple standard input/output (stdio) channel.
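Under the hood, MCP messages are JSON-RPC 2.0 requests and responses, and the stdio transport sends one JSON message per line. The Python sketch below only illustrates that framing for a `tools/list` and a `tools/call` request; it is not a working client (the `initialize` handshake and response handling are omitted), and calling the Dart MCP server's `get_runtime_errors` tool with empty arguments is an assumption for illustration.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC request ids only need to be unique within a session.
_next_id = count(1)


def rpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize one JSON-RPC 2.0 request as a single newline-terminated line,
    the framing MCP's stdio transport uses."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    # json.dumps escapes newlines inside strings, so one message == one line.
    return json.dumps(message) + "\n"


# Ask the server which tools it exposes (sent after the initialize handshake).
list_tools = rpc_request("tools/list")

# Invoke one tool by name; arguments are tool-specific, {} is a placeholder.
tools_call = rpc_request(
    "tools/call", {"name": "get_runtime_errors", "arguments": {}}
)

print(list_tools, end="")
print(tools_call, end="")
```

The client writes these lines to the server's stdin and reads responses, matched by `id`, from its stdout.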

Instead of an editor plugin for every task, you expose one server, and any MCP-aware assistant can orchestrate the work. Think of it as moving from “I type commands” to “I ask for an outcome, and my assistant drives DCM or Dart/Flutter through a well-defined set of tools.”

Together, the two servers give your assistant a clean split of concerns: Dart/Flutter MCP for language and runtime actions, and DCM MCP for code-quality work. The result is the habit we’ve always wanted: fast, consistent, team-sharable quality loops, now wired into an agent that doesn’t forget the steps.

Enabling the Dart/Flutter MCP Server

By default, the Dart extension uses the VS Code MCP API to register the Dart and Flutter MCP server, as well as a tool to provide the URI for the active Dart Tooling Daemon.

Explicitly enable or disable the Dart and Flutter MCP server by configuring the `dart.mcpServer` setting in your VS Code settings.

In VS Code, add the following to your user or workspace `settings.json`:

```jsonc
{
  // ...your other settings
  "dart.mcpServer": true
}
```

For Cursor, add the server to `~/.cursor/mcp.json`:

```json
{
  "mcpServers": {
    "dart": {
      "command": "dart",
      "args": ["mcp-server", "--force-roots-fallback"]
    }
  }
}
```

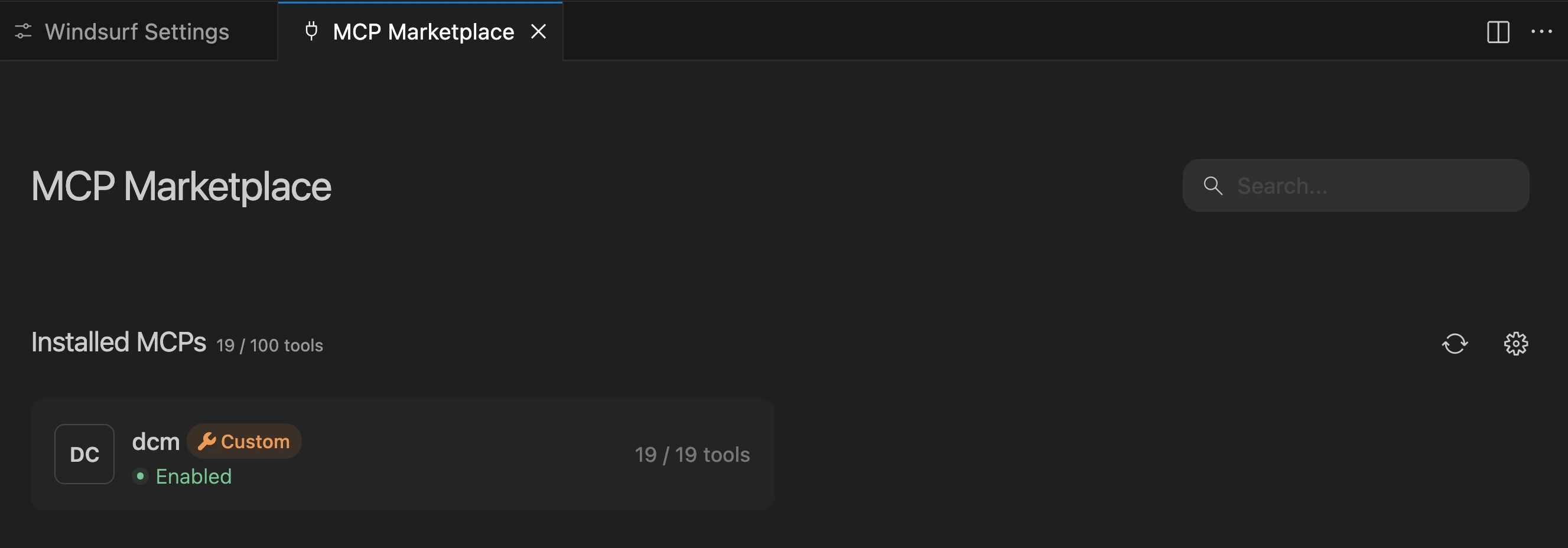

For Windsurf, you can also add servers manually to `~/.codeium/windsurf/mcp_config.json`. For example, this entry registers the DCM MCP server (covered in the next section):

```json
{
  "mcpServers": {
    "dcm": {
      "command": "dcm",
      "args": ["start-mcp-server", "--force-roots-fallback", "--client=windsurf"]
    }
  }
}
```

Enabling the DCM MCP Server

In practice, you don’t “install” a new binary; you tell your MCP client to start DCM in MCP mode over stdio. You need:

- DCM 1.31.0+ installed and on your PATH.

- An MCP-capable client or integration (for example Cursor, GitHub Copilot, JetBrains IDEs, or Gemini CLI).

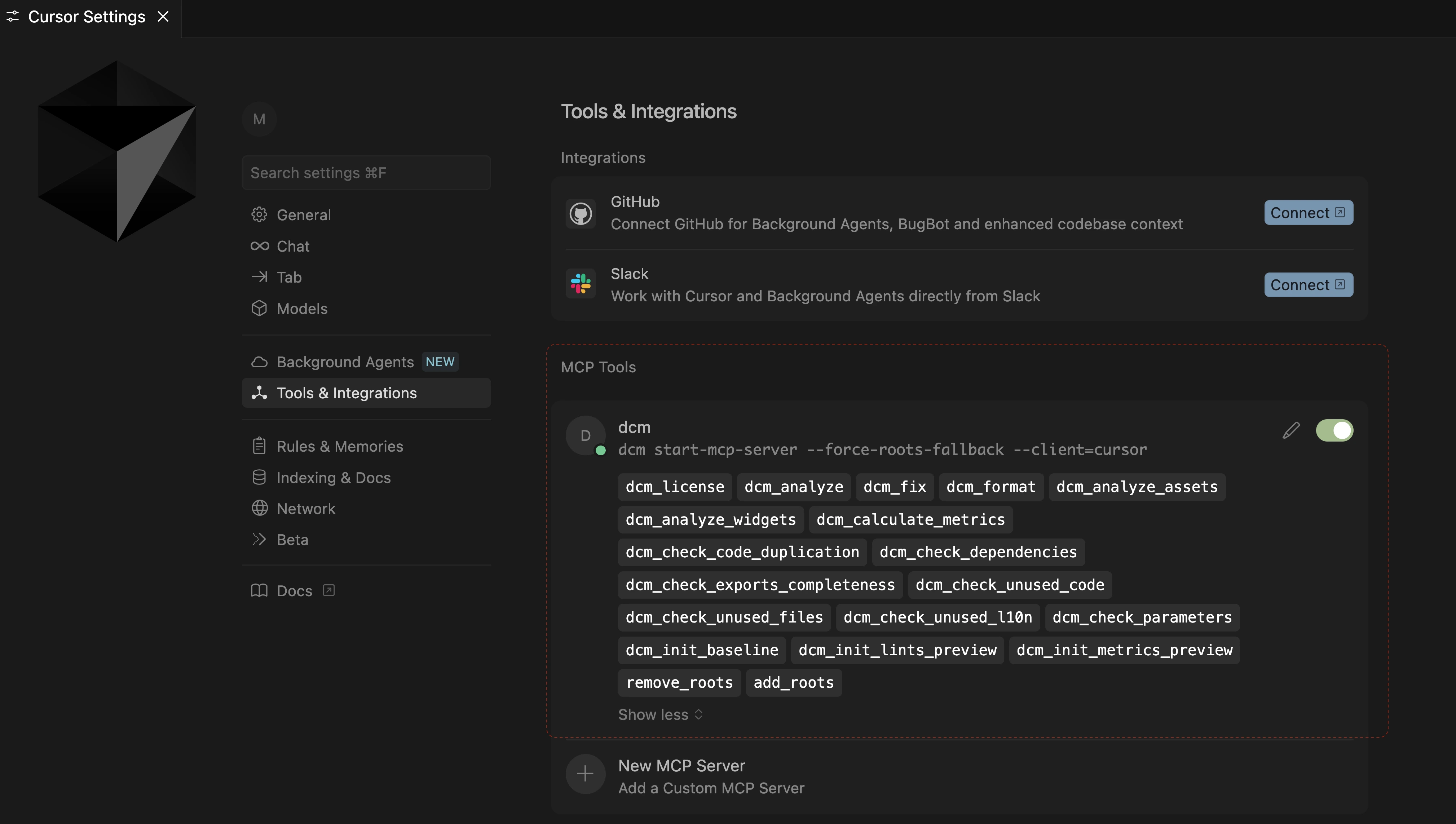

Here is the setting in Cursor.

Alternatively, add the server to `~/.cursor/mcp.json`:

```json
{
  "mcpServers": {
    "dcm": {
      "command": "dcm",
      "args": ["start-mcp-server", "--force-roots-fallback"]
    }
  }
}
```
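If you run both servers in Cursor, their entries sit side by side in the same `mcpServers` object. As a convenience, here is a small Python sketch (our own helper, not part of either tool) that merges the `dart` and `dcm` entries shown above into an existing `mcp.json` while keeping any other servers you have configured.

```python
import json
from pathlib import Path

# Server entries copied from the configurations above.
SERVERS = {
    "dart": {"command": "dart", "args": ["mcp-server", "--force-roots-fallback"]},
    "dcm": {"command": "dcm", "args": ["start-mcp-server", "--force-roots-fallback"]},
}


def merge_mcp_config(path: Path) -> dict:
    """Add or update the dart/dcm entries, keeping any other configured servers."""
    config = json.loads(path.read_text()) if path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    servers.update(SERVERS)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

Call it with `merge_mcp_config(Path.home() / ".cursor" / "mcp.json")`. It rewrites the file with 2-space indentation, so manual formatting in that file is not preserved.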

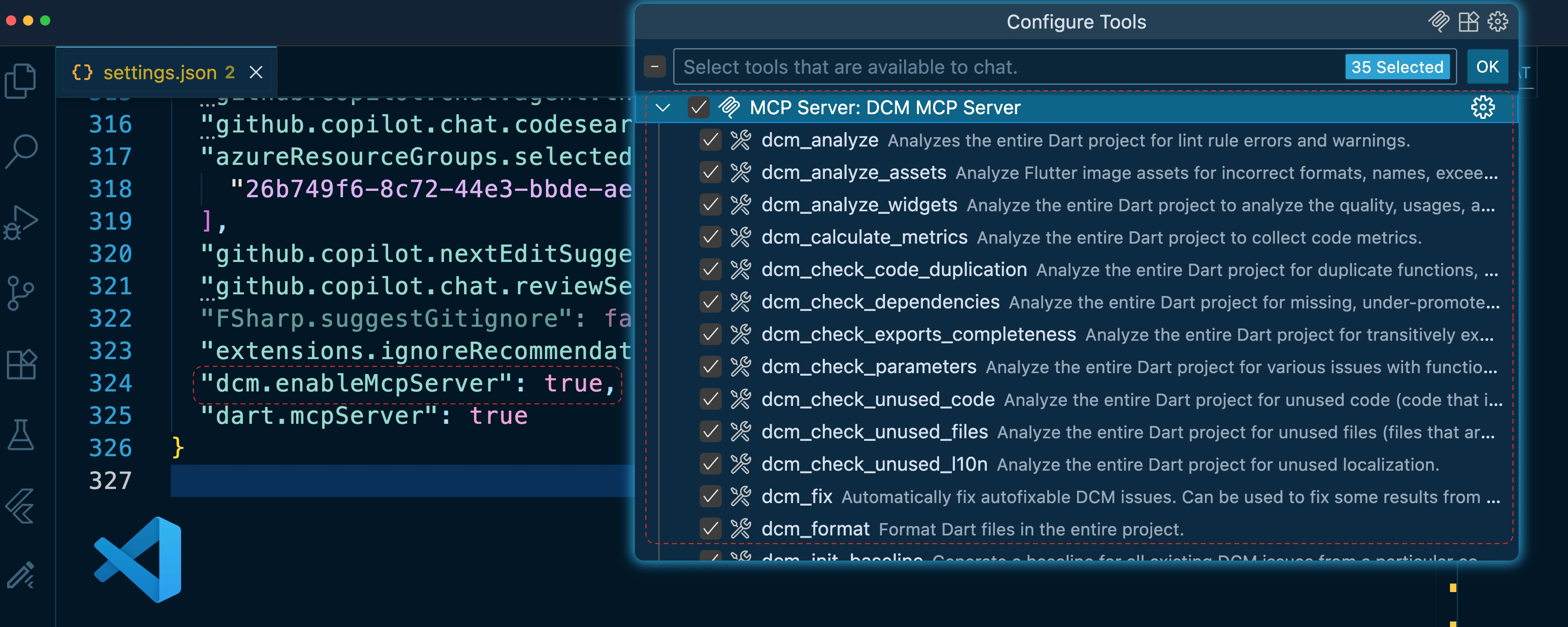

You can also add the configuration to VS Code and access the DCM MCP server via GitHub Copilot.

By default, the DCM extension uses the VS Code MCP API to register the DCM MCP server. Explicitly enable or disable it by configuring the `dcm.enableMcpServer` setting in your VS Code settings:

```jsonc
{
  // ...your other settings
  "dcm.enableMcpServer": true
}
```

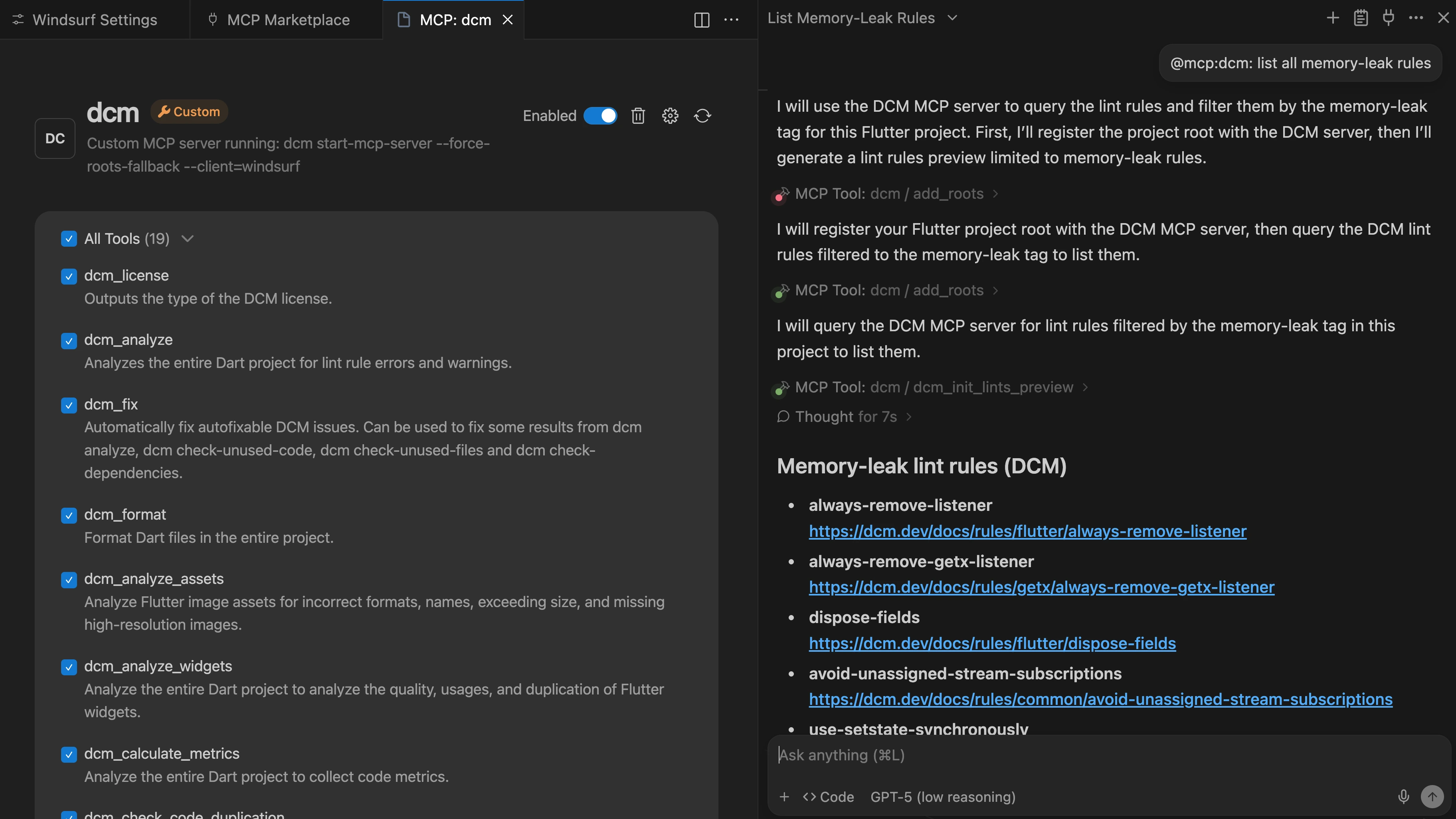

If you are a Windsurf user, you can also find the MCP server in the settings via a custom integration.

To learn more about installing and enabling DCM on all supported clients, see our DCM MCP Server documentation.

Note: for the examples and demos in this article, we use the following `analysis_options.yaml` configuration:

```yaml
include: package:flutter_lints/flutter.yaml

dart_code_metrics:
  extends:
    - recommended
  rules:
    - avoid-slow-collection-methods
    - avoid-single-child-column-or-row
    - prefer-bytes-builder
    - prefer-declaring-const-constructor
    - avoid-map-keys-contains
    - avoid-border-all
    - avoid-incorrect-image-opacity
    - avoid-returning-widgets
    - avoid-unnecessary-setstate
    - pass-existing-future-to-future-builder
    - pass-existing-stream-to-stream-builder
    - prefer-const-border-radius
    - prefer-dedicated-media-query-methods
    - prefer-match-file-name: true
    - avoid-undisposed-instances:
        ignored-instances:
          - SomeClass
        ignored-invocations:
          - WithAutoDispose
    - arguments-ordering:
        alphabetize: true
        first:
          - some
          - name
        last:
          - child
          - children
  exclude:
    parameters:
      - lib/generated/**
  metrics:
    halstead-volume: 50
    cyclomatic-complexity: 10
    lines-of-code: 10
    maximum-nesting-level: 5
```
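DCM rule entries take three shapes in the config above: a bare ID (`avoid-border-all`), an ID with a scalar (`prefer-match-file-name: true`), or an ID with an options map (`arguments-ordering`). If you script around the config, for example to check which rules from a lints-preview recommendation are already enabled, it helps to normalize them first. A minimal Python sketch, assuming the YAML has already been parsed into plain lists and dicts by a YAML library of your choice; treating `false` as "disabled" is our assumption, and `hypothetical-rule-id` is a placeholder.

```python
def normalize_rules(rules: list) -> dict:
    """Map each rule ID to its config: None for bare IDs, else the given value."""
    normalized = {}
    for entry in rules:
        if isinstance(entry, str):
            normalized[entry] = None  # "- avoid-border-all"
        elif isinstance(entry, dict) and len(entry) == 1:
            rule_id, value = next(iter(entry.items()))  # "- arguments-ordering: {...}"
            normalized[rule_id] = value
        else:
            raise ValueError(f"unexpected rule entry: {entry!r}")
    return normalized


def not_yet_enabled(recommended: list, rules: list) -> list:
    """Rule IDs from a recommendation list that the config does not enable."""
    enabled = {rid for rid, value in normalize_rules(rules).items() if value is not False}
    return [rid for rid in recommended if rid not in enabled]


# A slice of the config above, as a YAML parser would return it.
rules = [
    "avoid-border-all",
    {"prefer-match-file-name": True},
    {"arguments-ordering": {"alphabetize": True, "last": ["child", "children"]}},
]
print(not_yet_enabled(["avoid-border-all", "hypothetical-rule-id"], rules))
# ['hypothetical-rule-id']
```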

Debugging with Dart / Flutter MCP Server

The Dart and Flutter MCP server comes with many useful tools.

One of the most powerful parts of the Dart / Flutter MCP server is that it can surface runtime errors and even expand the widget tree context around a failing frame.

Instead of scanning logs manually, you can let the agent collect the right signals, point you at the exact file and line, and even re-run with a hot reload to confirm your changes.

Here’s a reusable prompt you can use as a debugging recipe:

1. Connect to the running app (Dart MCP: tooling daemon).

2. Fetch current runtime errors + stack traces (`get_runtime_errors`).

3. Expand the widget tree at the top frame (`get_widget_tree`).

4. Suggest the minimal code edits to fix the issue.

5. Run a `hot_reload` and re-fetch errors to confirm resolution.
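The recipe is a small verify loop. Here it is sketched in Python, assuming a hypothetical `call_tool(name, arguments)` adapter that forwards to the Dart MCP server and returns its result, and a hypothetical `apply_fix` callback standing in for the agent's (or your) edit step. The tool names come from the recipe; passing `{}` as arguments and treating an empty result as "no errors" are assumptions.

```python
from typing import Any, Callable

# Hypothetical adapter: forwards (tool name, arguments) to the Dart MCP server.
ToolCall = Callable[[str, dict], Any]
# Hypothetical edit step: receives the errors and widget tree, applies a minimal fix.
FixStep = Callable[[Any, Any], None]


def debug_loop(call_tool: ToolCall, apply_fix: FixStep, max_attempts: int = 3) -> bool:
    """Fetch errors -> inspect widget tree -> fix -> hot reload -> re-check.

    Assumes the tooling daemon is already connected (step 1 of the recipe).
    Returns True once get_runtime_errors reports nothing.
    """
    for _ in range(max_attempts):
        errors = call_tool("get_runtime_errors", {})
        if not errors:
            return True
        tree = call_tool("get_widget_tree", {})  # context around the failing frame
        apply_fix(errors, tree)                  # minimal edit, by the agent or you
        call_tool("hot_reload", {})
    # One last check after the final reload.
    return not call_tool("get_runtime_errors", {})
```

Capping the attempts keeps a fix that doesn't converge from reloading forever; at that point a human should look at the errors.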

This is just the beginning. Next, let's see how to use both the Dart/Flutter MCP server and the DCM MCP server for rigorous code quality checks across your codebase.

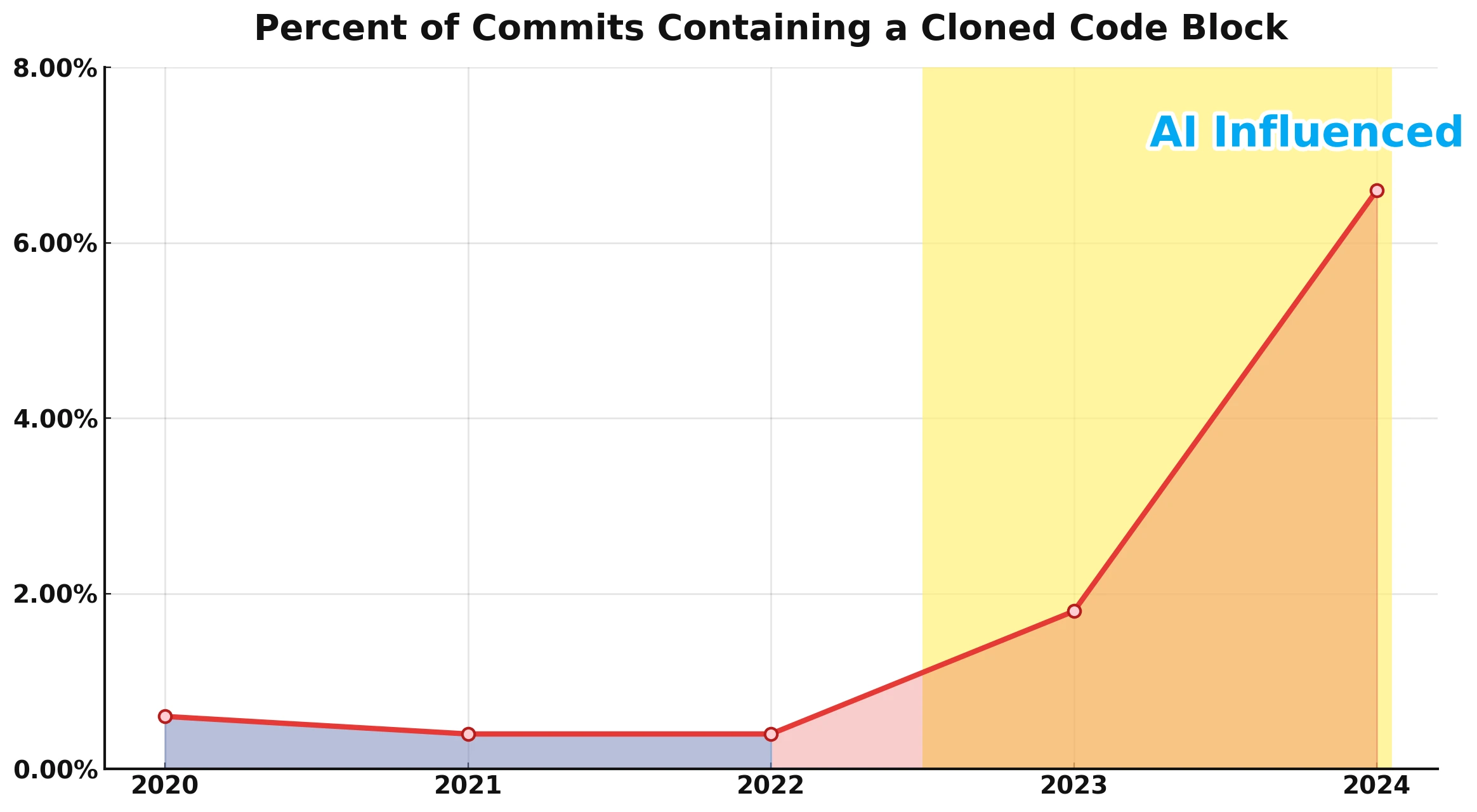

Generated Code & Code Quality

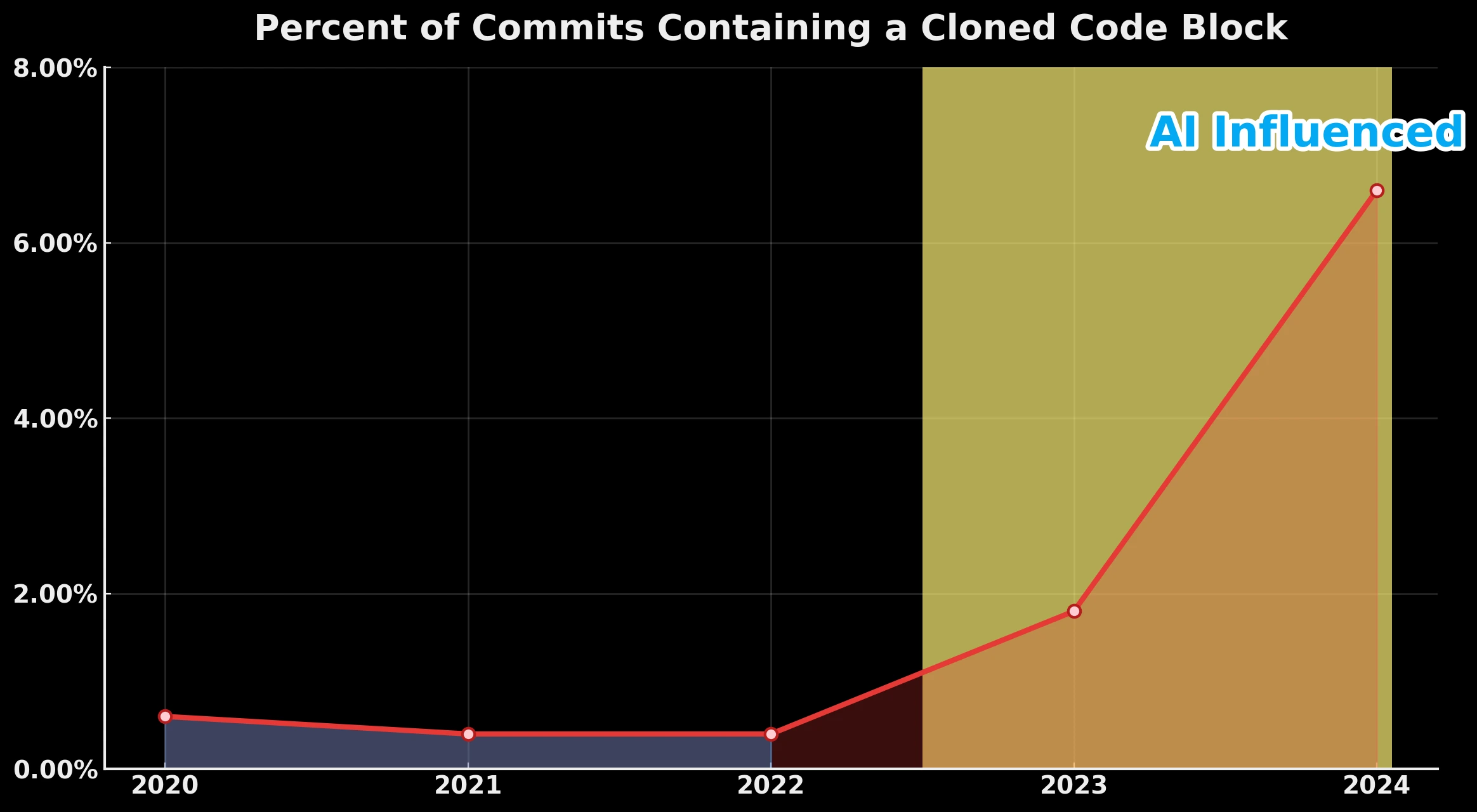

Every day, more of our code is AI-generated.

According to GitClear's AI Copilot Code Quality research: "4x more code cloning, 'copy/paste' exceeds 'moved' code for first time in history. Includes 2025 projections. Stack Overflow's 2024 Developer Survey showed that 63% of professional developers are currently using AI in their development process, with another 14% saying they plan to begin using AI soon."

That’s great for speed, but it also means more code that doesn’t follow our best practices, misses lint rules, or sneaks in unsafe defaults. In practice, the code often needs a cleanup pass right after it’s produced.

This is exactly where an agentic loop helps.

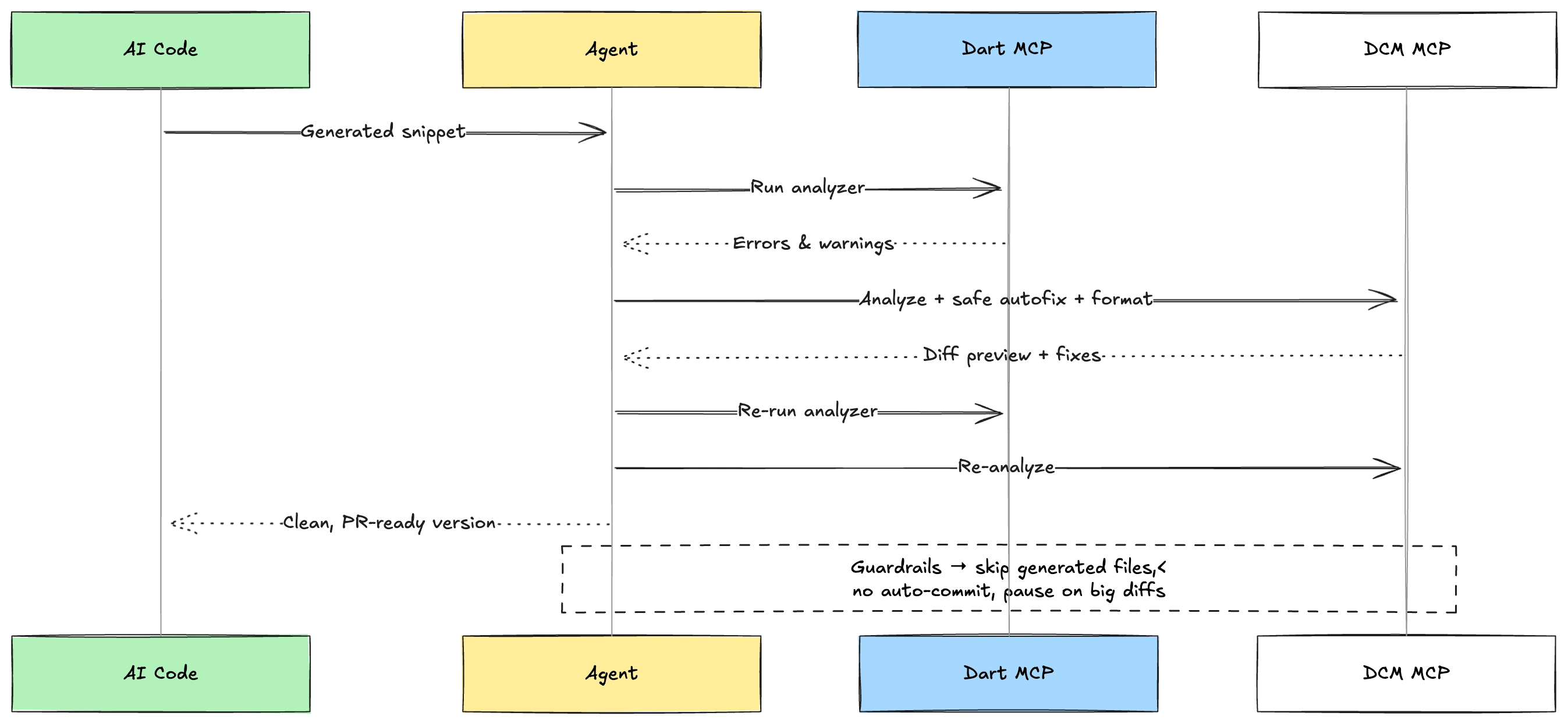

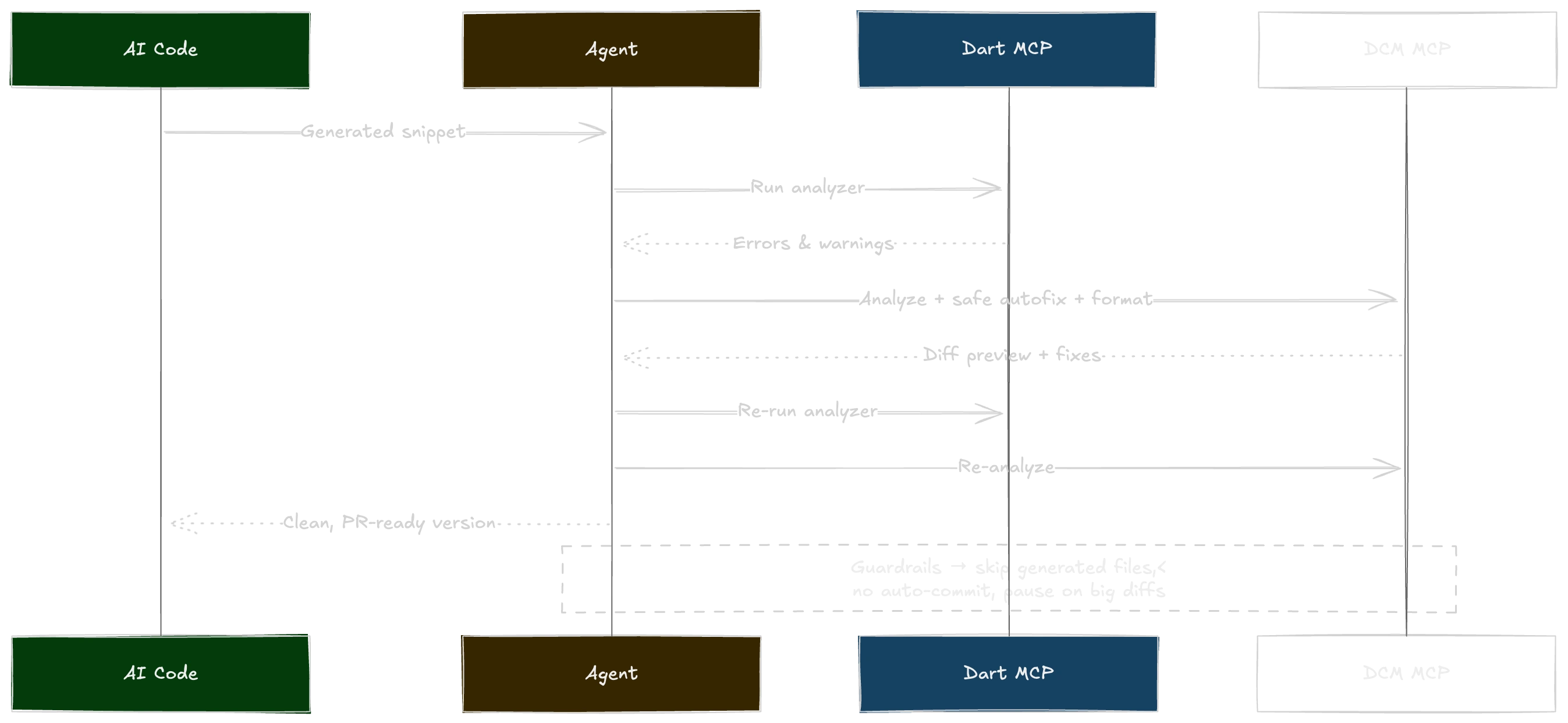

Instead of manually chasing warnings, we let the assistant run the tools we already trust through MCP. With the DCM MCP server handling quality (analyze → safe autofix → format → re-analyze) and the Dart/Flutter MCP server surfacing analyzer issues, we can take fresh, generated code and bring it up to our bar in one predictable sweep before it ever hits a PR.

We also keep sensible guardrails: skip known codegen artifacts (*.g.dart, *.freezed.dart, build/**) unless we explicitly opt in, and never stage or commit automatically.

The payoff is simple: faster iteration, fewer "surprise" reviews, and a codebase that stays readable and maintainable even as generation ramps up. In this section, we share instructions that agents can follow while they generate code; in the next section, we provide reusable prompts for agentic workflows that complete a series of tasks.

Let's start with instructions and guardrails for code quality.

Instructions

- Run Dart/Flutter **analyzer** and **AutoFix** on the new/modified files.

- Run **DCM analyze** on the same files → apply **safe** DCM autofixes → re-analyze.

- Run Dart/Flutter **format**

- Ensure formatting strictly follows project rules (re-run format if needed).

- If any analyzer **error** or DCM **error** remains, refine/regenerate and repeat the loop.

- Separately analyze **generated artifacts** (e.g., `**/*.g.dart`, `**/*.freezed.dart`, `build/**`) using:

  - `dcm init lints-preview` on modified/new files to detect **high-priority rules not currently enabled** that would have caught issues, especially performance and memory-leak risks. Do **not** modify generated artifacts; propose upstream fixes or rule enablement instead.

- Autofix warnings and style issues that DCM can fix automatically on modified/new files.

Stop when

- Errors = 0. Warnings are acceptable if reasonable; prefer small follow-up suggestions over disabling rules.

Output

- **Short summary** of what changed and which rules were fixed.

- **Remaining warnings** (if any) with one-line suggestions.

- **Lints-preview recommendations table** for generated artifacts:

| rule_id | category | why it matters (perf/correctness/etc.) | suggested action (enable / tune) |
|---|---|---|---|
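These instructions describe a loop with a clear exit condition: keep going until errors reach zero, with warnings allowed. A minimal Python sketch, where each step is a hypothetical callable you would wire to the Dart and DCM MCP tools, and the analyze step is assumed to return `(severity, rule_id)` pairs. The round cap is our addition, so a stubborn error ends in a report (and a regenerate request) instead of an endless loop.

```python
from typing import Callable

Finding = tuple[str, str]  # (severity, rule_id): an assumed result shape


def has_errors(findings: list[Finding]) -> bool:
    return any(severity == "error" for severity, _rule in findings)


def quality_loop(
    analyze: Callable[[], list[Finding]],  # Dart analyzer + DCM analyze on new/modified files
    safe_fix: Callable[[], None],          # Dart autofix + safe DCM autofixes only
    format_code: Callable[[], None],       # Dart/Flutter format
    max_rounds: int = 3,
) -> tuple[list[Finding], int]:
    """Fix -> format -> re-analyze until errors == 0 (warnings may remain).

    Returns the remaining findings and the number of rounds used.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    findings: list[Finding] = []
    for round_no in range(1, max_rounds + 1):
        safe_fix()
        format_code()
        findings = analyze()
        if not has_errors(findings):
            return findings, round_no  # stop condition met
    return findings, max_rounds
```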

While you can always paste these instructions into your prompts, most clients also let you register instructions that are applied automatically whenever code is generated.

For example, with GitHub Copilot in VS Code, you can add the instructions to `.github/copilot-instructions.md`, which is included in every chat request, or add a custom `*.instructions.md` file under the `.github/instructions` folder.

You can use the same instructions in other clients (such as Cursor), although the way you add them may differ.

Let's take Cursor as an example. There are a few steps:

- Create project-wide Cursor rules.

- Add your instructions as an `.mdc` file (Cursor keeps project rules under `.cursor/rules`).

- Add the instructions to the context when you generate code in your project.
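Keeping one source of truth avoids drift between clients. A small Python sketch that copies a single Markdown instructions file into both locations: `.github/copilot-instructions.md` for Copilot and a `.cursor/rules/*.mdc` rule for Cursor. The source file name and rule name are our own choices, and the `.mdc` front matter fields (`description`, `alwaysApply`) are an assumption to check against Cursor's documentation for your version.

```python
from pathlib import Path

# Cursor rule front matter; field names are an assumption to verify in Cursor's docs.
MDC_FRONT_MATTER = (
    "---\n"
    "description: Dart/Flutter + DCM quality loop for new or modified code\n"
    "alwaysApply: true\n"
    "---\n"
)


def sync_instructions(repo: Path, source: Path, rule_name: str = "dart-dcm-quality") -> list:
    """Copy one instructions file to Copilot's and Cursor's project locations."""
    body = source.read_text()
    copilot = repo / ".github" / "copilot-instructions.md"
    cursor = repo / ".cursor" / "rules" / f"{rule_name}.mdc"
    for target, text in ((copilot, body), (cursor, MDC_FRONT_MATTER + body)):
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(text)
    return [copilot, cursor]
```

Note that it overwrites an existing `.github/copilot-instructions.md`; merge by hand if you already have one.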

Prompt Library

Prompts are your team’s muscle memory in text form. Instead of “remember to run X with flags Y,” you ask for an outcome and let the agent orchestrate DCM via MCP. Each prompt below starts with a quick why/when, then a ready-to-paste block, so the agent knows exactly what outcome is expected and how to get there efficiently.

Each prompt is designed so you can copy and paste it, or download our list of prompts to use in your own projects.

Let's start simple, then move on to more advanced use cases that chain multiple commands.

Discovery & Sharable prompts

One of the simplest ways to use the DCM MCP server is to turn natural-language requests into DCM-specific queries. For example, what if you want to find rules for specific categories? Take this prompt:

Show me the list of available DCM rule tags

Or something like:

Show me all DCM rules that are related to performance and memory leaks and also targeting security issues in Flutter or Dart

Now we can turn these simple prompts into a reusable, sharable prompt that everyone on the team can use:

Goal: Propose DCM rules that fit my intent, with YAML-ready IDs.

Context:

- Use the DCM MCP rule catalog (ids, categories, severity, autofixable, short description).

- If rules live in a dedicated DCM config, target that. Otherwise, prepare an analysis_options.yaml snippet.

- Do NOT commit anything.

- Rules always live in the `analysis_options.yaml` file under `dart_code_metrics`, as in the following example:

```yaml
dart_code_metrics:
  extends:
    - recommended # preset name
  rules:
    - avoid-slow-collection-methods # Comment
  metrics:
    lines-of-code: 10 # metric-id: metric value
```

Steps:

1) Query the rule catalog and filter rules by {{["performance", "memory-leaks"]}}, limited to the categories {{["common", "flutter"]}}.

2) Output a table:

| rule_id | category | severity | autofixable | why it matches my keywords |
|---|---|---|---|---|

3) Output YAML-ready rule IDs with same-line comments.

4) Suggest what to try first (autofixable rules).

Output:

- Markdown table with links to documentation.

- Use the YAML reporter to output rules ready for `analysis_options.yaml`: rule IDs only, with no extra config.

- A 3–5 line recommendation (“Start with these X rules because…”).
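Steps 1 to 4 of the template boil down to a filter, a sort, and a YAML render. This Python sketch runs those steps over a rule catalog you have already fetched; the catalog's field names (`id`, `tags`, `category`, `autofixable`) and the sample entries are hypothetical, not DCM's actual schema or rule metadata.

```python
def pick_rules(catalog: list, tags: list, categories: list) -> list:
    """Keep rules matching any wanted tag within the wanted categories;
    autofixable rules come first, since they are the ones to try first."""
    wanted_tags = set(tags)
    matches = [
        rule for rule in catalog
        if rule["category"] in categories and wanted_tags & set(rule["tags"])
    ]
    return sorted(matches, key=lambda r: (not r["autofixable"], r["id"]))


def to_yaml(rules: list) -> str:
    """Render YAML-ready rule IDs with same-line comments."""
    lines = ["dart_code_metrics:", "  rules:"]
    for rule in rules:
        tags = ", ".join(sorted(rule["tags"]))
        fix = "autofixable" if rule["autofixable"] else "manual"
        lines.append(f"    - {rule['id']} # {tags}; {fix}")
    return "\n".join(lines)


# Hypothetical catalog entries; query the DCM MCP server for the real ones.
catalog = [
    {"id": "example-leak-rule", "category": "flutter", "tags": ["memory-leaks"], "autofixable": False},
    {"id": "example-perf-rule", "category": "common", "tags": ["performance"], "autofixable": True},
    {"id": "example-style-rule", "category": "common", "tags": ["style"], "autofixable": True},
]
print(to_yaml(pick_rules(catalog, ["performance", "memory-leaks"], ["common", "flutter"])))
```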

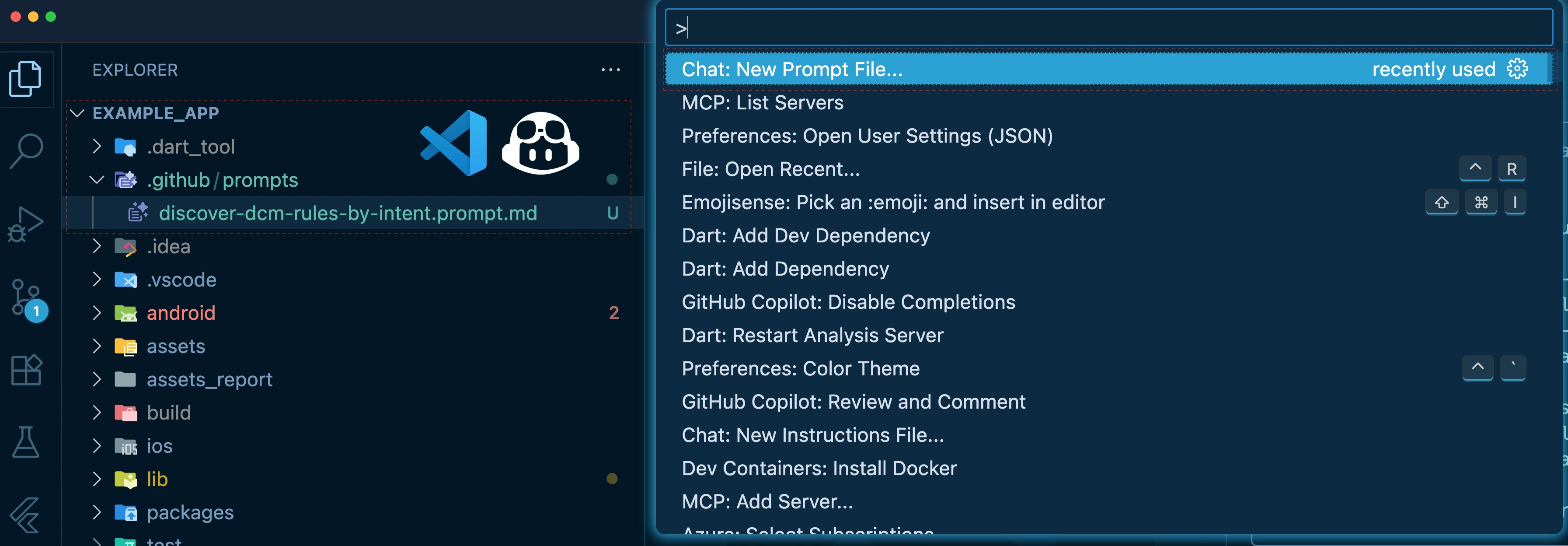

For example, you can now turn this template into a sharable GitHub Copilot prompt file that is checked into the repository, so everyone on the team can reuse it.

The steps are simple:

Either use the Command Palette in VS Code to create a new prompt file, or create a `prompts` folder under the `.github` folder at the root of the project and paste the template above into a Markdown file with the `.prompt.md` extension.

In other editors, you can still use the same sharable prompt from your project. For example, you can use the same Markdown file in Cursor, as shown below:

Now that we have seen prompts that can be reused across your team, or even shared as a package, let's explore more complex scenarios.

Workspace Quality Pass (Analyze → Autofix → PR)

Use this as your everyday "make it clean and consistent" flow with no surprises, verified at the end.

Goal: Run a full DCM quality pass across the whole branch: analyze → safe autofix → format → re-analyze → propose a PR commit message → list remaining issues with links.

Context

- Use DCM MCP tools for analyze/fix/format. Do NOT stage or commit.

- Respect an existing baseline if present; call out only-new issues when relevant.

- Output is in chat only (no files written).

Steps

1. Analyze (whole workspace on current branch):

- Run DCM analyze across the entire workspace (excluding `*.g.dart` files and any excluded files and folders).

- Collect machine-readable findings and summarize:

• totals by severity

• top rules by count

• top files by count

2. Safe Autofix:

- Apply only safe/autofixable changes.

- Show a unified diff preview (do NOT stage/commit).

- If the diff exceeds 200 lines, pause and ask before applying.

3. Format: format the files changed by autofixes.

4. Verify:

- Re-run DCM analyze on the whole workspace.

- Show before/after counts, noting any residual “error” severity.

5. Propose PR Commit Message:

- Conventional header:

chore(dcm): apply safe autofixes across branch

- Bullets summarizing fixed rules with counts (e.g., `avoid-unsafe-reduce: 7`).

- Note: “safe fixes only; no files staged or committed.”

6. Report Remaining Issues (with links):

- Produce a Markdown table:

| file | line | rule_id | message | link |
|---|---|---|---|---|

- For GitHub/GitLab/ADO remotes, construct a URL to the current {{branch}} at the line.

- Otherwise, provide `relative/path.dart:line`.

Constraints

- Never stage or commit automatically.

- Only apply changes considered safe/autofixable by DCM.

- Keep the chat output concise; collapse long logs into summaries.

Output

- Proposed PR commit message (fenced).

- Summary of applied fixes (rule → count).

- Table of remaining findings with links (file, line, rule_id, message, link).

- One-line next step (e.g., “open these files” or “run the tuning prompt”).
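Most of this prompt's bookkeeping (totals by severity, top rules and files, the 200-line pause, the per-rule commit bullets) is plain counting. A Python sketch of that bookkeeping, assuming findings arrive as dicts with `file`, `rule_id`, and `severity` keys; those field names are our assumption, not DCM's report schema.

```python
from collections import Counter


def summarize(findings: list, top: int = 3) -> dict:
    """Totals by severity plus the most frequent rules and files."""
    return {
        "by_severity": dict(Counter(f["severity"] for f in findings)),
        "top_rules": Counter(f["rule_id"] for f in findings).most_common(top),
        "top_files": Counter(f["file"] for f in findings).most_common(top),
    }


def changed_lines(unified_diff: str) -> int:
    """Count added/removed lines, skipping the ---/+++ file headers.
    (Approximate: a removed line whose content starts with "--" is skipped too.)"""
    return sum(
        1
        for line in unified_diff.splitlines()
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    )


def needs_confirmation(unified_diff: str, limit: int = 200) -> bool:
    return changed_lines(unified_diff) > limit


def commit_message(fixed_counts: dict) -> str:
    """Conventional header, per-rule bullets (most fixes first), and the safety note."""
    bullets = [
        f"- {rule}: {n}"
        for rule, n in sorted(fixed_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    ]
    return "\n".join(
        ["chore(dcm): apply safe autofixes across branch", "", *bullets, "",
         "Note: safe fixes only; no files staged or committed."]
    )
```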

Let's see this in action below:

Branch Quality Gate (Dart/Flutter + DCM MCP Servers)

Now we’re entering a focused and very common flow. You only care about what you touched, and you want zero Dart/Flutter analyzer errors and zero DCM lint blockers before opening a PR.

This is where Dart MCP (analyzer) and DCM MCP (analyze → safe autofix → format → re-analyze) work together to clean up the diff and hand you a paste-ready PR summary.

Goal

- Scan all Dart files in the workspace with both Dart/Flutter analyzer and DCM.

- Apply SAFE DCM autofixes and format, then re-check.

- Produce a PR-ready summary and a proposed commit message.

- List anything still failing with links.

Context

- Use BOTH MCP servers:

- Dart MCP server → run analyzer across all `.dart` files; surface errors/warnings (don’t auto-apply analyzer fixes).

- DCM MCP server → analyze + safe autofix + format + re-analyze across the workspace.

- Treat diffs > 200 changed lines as “large” and ASK before applying DCM autofixes.

- Do NOT stage or commit changes automatically.

- Output is in chat only (no files written).

Steps

1. Discover targets:

- Find all `*.dart` files in the workspace.

- Exclude common generated/build outputs (e.g., `**/*.g.dart`, `**/*.freezed.dart`, `build/**`).

2. Dart/Flutter analyzer (via Dart MCP):

- Run analyzer on all discovered Dart files.

- Collect errors/warnings with file, line, code, and message.

3. DCM pass (via DCM MCP) on the workspace:

- Run DCM analyze; summarize by severity and rule.

- Apply SAFE autofixes only; show a unified diff preview. If the diff exceeds 200 lines, pause and ask.

- Format changed files.

- Re-run DCM analyze to verify; show before/after counts.

4. Compose outputs:

- Proposed commit message (conventional):

```
chore(quality): safe DCM autofixes + formatting (workspace)

- fixed:
  - <rule_id>: <count>
  - <rule_id>: <count>
- note: only safe DCM fixes applied; nothing staged/committed
```

- PR Summary (paste-ready):

• Scope: all Dart files in workspace

• DCM fixed: per-rule counts

• Analyzer results: remaining errors/warnings

• Remaining issues table (merged Dart/Flutter + DCM):

| file | line | source | code/rule | message | link |
|---|---|---|---|---|---|

- For GitHub/GitLab/ADO, build URLs to the current HEAD line; else use `relative/path.dart:line`.

- Next steps: concise checklist for any residual error severity.

Constraints

- Never stage or commit automatically.

- Only apply DCM-labeled safe/autofixable changes.

- Keep output concise; collapse verbose logs into summaries.

Output

- Proposed PR commit message (fenced).

- PR Summary block (paste-ready).

- Table of remaining issues with links (file, line, source=`dart|flutter|dcm`, code/rule, message, link).

- One-line next step.
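Merging the two sources into one table and turning `file:line` into a link is mechanical. The Python sketch below builds GitHub and GitLab blob URLs with a line anchor (`/blob/<ref>/<path>#L<line>` and `/-/blob/<ref>/<path>#L<line>`) and falls back to `relative/path.dart:line` for anything else; Azure DevOps is left out here. The finding fields and the remote handling (HTTPS remotes only) are simplified assumptions.

```python
from urllib.parse import quote


def line_link(remote: str, ref: str, path: str, line: int) -> str:
    """Link to a file line on GitHub/GitLab, else a plain path:line."""
    base = remote.removesuffix(".git").rstrip("/")
    quoted = quote(path)  # "/" stays unescaped by default
    if base.startswith("https://github.com/"):
        return f"{base}/blob/{ref}/{quoted}#L{line}"
    if base.startswith("https://gitlab.com/"):
        return f"{base}/-/blob/{ref}/{quoted}#L{line}"
    return f"{path}:{line}"


def remaining_issues_table(findings: list, remote: str, ref: str) -> str:
    """findings: dicts with file, line, source ('dart'|'flutter'|'dcm'), code, message."""
    rows = [
        "| file | line | source | code/rule | message | link |",
        "|---|---|---|---|---|---|",
    ]
    for f in sorted(findings, key=lambda f: (f["file"], f["line"])):
        message = f["message"].replace("|", "\\|")  # keep table columns intact
        link = line_link(remote, ref, f["file"], f["line"])
        rows.append(
            f"| {f['file']} | {f['line']} | {f['source']} | {f['code']} | {message} | {link} |"
        )
    return "\n".join(rows)
```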

Commands Combination

Now that we have seen a few templates for different scenarios, let's explore how to combine multiple commands in one prompt and reuse those prompts.

Combining the Dart and Flutter MCP server with the DCM MCP server lets agents execute even more powerful tasks. Below are a few examples. These are just prompt-openers: you can add more tasks and responsibilities to make them even more autonomous.

Runtime Crash/Overflow to Fix (Dart MCP + DCM + Hot Reload)

Sometimes a screen blows up at runtime with a RenderFlex overflow or a null error, and you miss the error logs. This is where an agent helps: it can pull runtime errors and the widget subtree via Dart MCP, run targeted static checks with DCM MCP, apply only safe fixes, then hot-reload and confirm the error is gone.

Here is a template prompt you can run on your branch before opening a PR:

Goal

- Triage a runtime error (e.g., RenderFlex overflow, null-check error), propose a fix, apply safe changes, and verify via hot reload.

Steps

1. Connect to the Dart Tooling Daemon and fetch current runtime errors and stack traces (Dart MCP).

2. Expand the failing widget context (get_widget_tree at the stack’s top frame), capture key props (Dart MCP).

3. Open implicated files and run static checks (DCM analyze) only on those files.

4. Propose minimal code edits. Apply **only safe/autofixable** DCM fixes; format (DCM).

5. Hot-reload the app and re-query runtime errors (Dart MCP).

6. If still failing, show next-best edits; otherwise summarize: what changed, which DCM rules fired, before/after runtime status.

Constraints

- Never stage/commit. Ask if edits exceed 200 changed lines.

Output

- Short summary + diff snippet + linkable file:line list.
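Step 3 scopes DCM to the files implicated by the stack trace. Dart stack frames reference locations as `package:<name>/<path>.dart:<line>:<column>`, so a regex is enough for a sketch. This Python helper keeps only frames from your own package and maps them to `lib/` paths; the package name `my_app` and the sample trace are hypothetical, and the `package:` to `lib/` mapping assumes the standard package layout.

```python
import re

FRAME = re.compile(r"\(package:(?P<pkg>\w+)/(?P<path>[^:)]+\.dart):(?P<line>\d+)")


def implicated_files(stack_trace: str, package: str) -> list[tuple[str, int]]:
    """(lib/<path>, line) per file from `package`, top-most frame first."""
    seen: dict[str, int] = {}
    for m in FRAME.finditer(stack_trace):
        if m["pkg"] != package:
            continue  # skip Flutter framework and third-party frames
        # Keep the first (top-most) line seen for each file.
        seen.setdefault(f"lib/{m['path']}", int(m["line"]))
    return list(seen.items())


# Hypothetical trace for illustration.
trace = """
#0      RenderFlex.performLayout (package:flutter/src/rendering/flex.dart:1000:7)
#1      ProductCard.build (package:my_app/widgets/product_card.dart:42:12)
#2      StatelessElement.build (package:flutter/src/widgets/framework.dart:5000:49)
#3      ProductList._buildItem (package:my_app/screens/product_list.dart:88:5)
"""
print(implicated_files(trace, "my_app"))
# [('lib/widgets/product_card.dart', 42), ('lib/screens/product_list.dart', 88)]
```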

Safe Dependency Upgrade & Migration Gate

We upgrade packages often, and upgrades sometimes introduce issues. This is a task you can offload to an agent, which can apply the upgrade and verify that everything still looks good afterwards.

Here is an example:

Goal & Context

- Always use both **Dart MCP** and **DCM MCP** to perform a safe package upgrade, apply fixes, and ensure no regressions slip in.

Steps

1. **Plan upgrade**:

- Run `dart pub outdated` via the Dart MCP server.

- Propose a `dart pub upgrade --major-versions` plan with preview output.

2. **Apply in isolation**:

- Apply upgrades in a **temporary patch**.

- Run analyzer across the workspace (Dart MCP: analyze_files).

3. **Fix candidates**:

- Propose `dart fix --apply` changes.

- Show a unified diff preview and **ask before applying**.

4. **Quality sweep**:

- Run DCM analyze.

- Apply **safe autofixes** + format.

- Re-analyze to verify.

5. **Summarize**:

- Highlight analyzer findings and any new DCM “error” severity issues.

- Draft a PR message with upgraded packages, fixed rules, and follow-up recommendations.

Constraints

- Never stage or commit automatically.

- Pause if the diff exceeds 400 lines.

Output

- **PR summary** including:

- List of upgraded packages.

- Applied analyzer fixes (Dart MCP).

- Applied DCM fixes with rule counts.

- Remaining issues table: file, line, source=`dart|dcm`, code/rule, message.

- Recommended follow-ups.
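The upgrade plan can start from `dart pub outdated --json`. The sketch below flags direct and dev dependencies whose latest version is a breaking bump over the current one, i.e. the candidates `--major-versions` would touch. The JSON shape it reads (a `packages` list whose entries have `package`, `kind`, and `current`/`latest` objects with a `version` field) is our assumption about that output, so check it against your SDK first; the sample data is made up. For 0.x versions it treats a minor bump as breaking (ignoring the 0.0.x edge case).

```python
import json


def breaking_key(version: str) -> tuple:
    """The part of a version whose change signals a breaking release:
    the major number, or (0, minor) while the major version is 0."""
    core = version.split("+")[0].split("-")[0]  # drop build metadata and pre-release
    major, minor, *_ = (int(part) for part in core.split("."))
    return (major,) if major > 0 else (0, minor)


def major_upgrades(outdated_json: str) -> list[tuple[str, str, str]]:
    """(package, current, latest) for direct/dev deps with a breaking latest version."""
    report = json.loads(outdated_json)
    upgrades = []
    for pkg in report.get("packages", []):
        current = (pkg.get("current") or {}).get("version")
        latest = (pkg.get("latest") or {}).get("version")
        if pkg.get("kind") not in ("direct", "dev") or not current or not latest:
            continue  # transitive deps, or entries without a resolved version
        if breaking_key(current) != breaking_key(latest):
            upgrades.append((pkg["package"], current, latest))
    return upgrades


# Made-up sample in the assumed shape of `dart pub outdated --json`.
sample = json.dumps({"packages": [
    {"package": "pkg_a", "kind": "direct", "current": {"version": "0.13.6"}, "latest": {"version": "1.2.0"}},
    {"package": "pkg_b", "kind": "direct", "current": {"version": "1.8.0"}, "latest": {"version": "1.9.0"}},
    {"package": "pkg_c", "kind": "transitive", "current": {"version": "1.9.0"}, "latest": {"version": "2.0.0"}},
]})
print(major_upgrades(sample))  # [('pkg_a', '0.13.6', '1.2.0')]
```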

Performance & Assets Health (metrics + widgets + assets)

Having an agent routinely check asset optimization and run metrics keeps you on top of your codebase's performance and maintainability, which can boost your productivity.

Here is one example prompt:

Goal

- Identify static performance risks and waste in UI/assets, propose quick wins, and verify.

Steps

1. Calculate complexity metrics and list functions above the thresholds (DCM metrics).

2. Run widget analysis for rebuild/structure smells and missing semantics (DCM analyze_widgets).

3. Audit image/asset issues (oversized, wrong formats/dimensions) (DCM analyze_assets).

4. Propose small refactors (extract methods, keys, consts), and image actions (compress/resize/convert).

5. Apply **safe** DCM fixes (if any) + format, then re-analyze to verify deltas.

Output

- Markdown table of hotspots: (file | function/widget | issue | quick win) + suggested PR checklist.

Constraints

- No auto-commit; keep edits minimal and safe.
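Step 1 compares each function's metrics against thresholds. Using the limits from this article's `analysis_options.yaml` (`cyclomatic-complexity: 10`, `maximum-nesting-level: 5`, and so on), here is a Python sketch of that comparison that produces rows for the hotspot table. The per-function metric values are assumed to come from DCM's metrics output in a shape of our choosing, and the sample values are made up.

```python
THRESHOLDS = {  # limits from the article's analysis_options.yaml
    "halstead-volume": 50,
    "cyclomatic-complexity": 10,
    "lines-of-code": 10,
    "maximum-nesting-level": 5,
}


def hotspots(functions: list, thresholds: dict = THRESHOLDS) -> list:
    """(file, function, issue) for every metric above its threshold."""
    rows = []
    for fn in functions:
        for metric, limit in thresholds.items():
            value = fn["metrics"].get(metric)
            if value is not None and value > limit:
                rows.append((fn["file"], fn["name"], f"{metric} {value} > {limit}"))
    return rows


def as_markdown(rows: list) -> str:
    lines = ["| file | function/widget | issue | quick win |", "|---|---|---|---|"]
    # The quick-win column is left empty for the agent to fill in.
    lines += [f"| {file} | {name} | {issue} | |" for file, name, issue in rows]
    return "\n".join(lines)
```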

Once it has the results, the agent can also suggest how to fix some of the issues. You can also adapt the prompt so the agent fixes the asset and performance issues itself.

Internationalization Audit (Dart + DCM)

Missing translations and unused localization strings are common issues in Flutter projects. An agent equipped with the right tools can audit your code, flag these problems, and even suggest fixes, saving hours of manual effort and simplifying maintenance.

Here is an example that uses DCM to find unused l10n entries and either fix them or report back:

Goal

- Find hard-coded user-facing strings, clean up unused l10n, and align gen-l10n with analyzer.

Steps

1. Scan for l10n issues and **unused l10n** (DCM: check_unused_l10n + analyze).

2. Detect hard-coded strings in UI files; propose extraction points and ARB keys (DCM findings + heuristics).

3. Validate l10n setup with analyzer (Dart MCP: analyze_files); report missing delegates/locale configs.

4. Offer a staged plan:

- generate/merge ARB entries,

- replace literals with lookups (provide line-level edits),

- remove unused keys.

5. Apply **safe** replacements where trivial; format; re-analyze (both).

Output

- Checklist: new keys, replacements, remaining manual items with file:line links.

Constraints

- Don’t auto-modify ARB files without confirmation.
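Step 2's heuristics can start as simply as finding string literals passed straight to `Text(...)`. A rough Python sketch of that heuristic, which also proposes camelCase ARB keys; it ignores interpolated strings, other widgets, and multi-line literals, and the key-naming scheme is our own choice rather than a gen-l10n requirement. It only proposes entries and leaves ARB files untouched, as the constraint requires.

```python
import re

# Text('...') or Text("...") with a plain literal (no $ interpolation, no escapes).
TEXT_LITERAL = re.compile(r"""\bText\(\s*(['"])(?P<text>[^'"$\\]+)\1""")


def arb_key(text: str) -> str:
    """'Add to cart' -> 'addToCart'; prefixed if it would start with a digit."""
    words = [w.lower() for w in re.findall(r"[A-Za-z0-9]+", text)]
    if not words:
        return "unnamedText"
    key = words[0] + "".join(w.capitalize() for w in words[1:])
    return key if key[0].isalpha() else "text" + key


def find_hardcoded(dart_source: str, path: str) -> list:
    """Proposed extractions: file:line, the literal, and a suggested ARB key."""
    proposals = []
    for m in TEXT_LITERAL.finditer(dart_source):
        line = dart_source.count("\n", 0, m.start()) + 1
        proposals.append({"file": f"{path}:{line}", "text": m["text"], "key": arb_key(m["text"])})
    return proposals


source = """
class CartButton extends StatelessWidget {
  @override
  Widget build(BuildContext context) => const Text('Add to cart');
}
"""
print(find_hardcoded(source, "lib/cart_button.dart"))
```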

A Custom Copilot Agent (Chat Mode) for Dart + DCM

GitHub Copilot lets you define custom chat modes (repo-scoped “agents” with their own instructions), and Copilot can also use MCP servers, so your agent can call real tools (Dart/Flutter MCP, DCM MCP) from chat. This means you can keep custom agents for different purposes, and each one always loads your system prompt and context.

In VS Code, you create a .chatmode.md file (or prompt/instructions files) and select it in the Copilot Chat UI; from there, the mode can invoke MCP tools and preconfigured MCP prompts.

Let's start by creating a Quality Gatekeeper.

This custom mode acts like a quality agent: analyze only the files you touched, suggest safe fixes, format, re-check, and output a paste-ready PR summary. It uses Dart MCP for analyzer/format and DCM MCP for analyze/fix/verify.

```markdown
---
description: 'Changed-files quality gate for Dart/Flutter using MCP tools'
tools:
  [
    'codebase',
    'usages',
    'vscodeAPI',
    'think',
    'problems',
    'changes',
    'testFailure',
    'terminalSelection',
    'terminalLastCommand',
    'openSimpleBrowser',
    'fetch',
    'findTestFiles',
    'searchResults',
    'githubRepo',
    'extensions',
    'runTests',
    'editFiles',
    'runNotebooks',
    'search',
    'new',
    'runCommands',
    'runTasks',
    'Dart SDK MCP Server',
    'DCM MCP Server'
  ]
---

### Identity

You are a cautious, fast “quality gatekeeper” for Dart/Flutter repos. You only act on changed files, apply **safe** fixes, and never auto-commit.

### Capabilities & Sources

- Use **Dart MCP** tools: `analyze_files`, `dart_fix`, `dart_format`.
- Use **DCM MCP** tools: `analyze`, `fix (safe)`, `analyze (verify)`.
- You may call preconfigured MCP prompts via `/mcp.<server>.<prompt>` if present.
- Respect workspace roots and excludes: `**/*.g.dart`, `**/*.freezed.dart`, `build/**`.

### Operating Rules

- Do **not** stage or commit.
- If the unified diff exceeds 200 lines, ask before continuing.
- Keep output concise; include a paste-ready PR summary and a table of any remaining issues.
- Always format code.

### Default Workflow (trigger with: "Run gate")

1. Detect changed files (vs PR base or repo default).
2. Dart MCP:
   - `analyze_files` on changed files; collect `file`, `line`, `code`, `message`.
   - Suggest `dart_fix` candidates; ask before applying. Run `dart_format` on changed files.
3. DCM MCP:
   - `analyze` on the same set; summarize by severity & rule.
   - `fix` with **safe** autofixes only.
   - Re-run `analyze` to verify; show before/after counts.
4. Output:
   - **Proposed commit message** (conventional `chore(quality): safe DCM autofixes + format (changed files)`).
   - **PR Summary** with fixed rules (counts) and remaining issues:

     | file | line | source (dart/dcm) | code/rule | message | link |
     |---|---|---|---|---|---|

   - One-line next step for any residual “error”.

### Guardrails

- Never touch generated artifacts unless asked.
- If analyzer/DCM still reports **errors**, stop and list blockers with links.
```
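Step 1 of the chat mode's workflow (detect changed files, minus generated artifacts) is easy to reproduce outside the agent, for example to sanity-check what it will touch. A Python sketch using `git diff --name-only` against the merge base; the base ref `origin/main` is an assumption, uncommitted changes are not included, and the exclusion check mirrors the chat mode's `**/*.g.dart`, `**/*.freezed.dart`, and `build/**` patterns with plain string tests.

```python
import subprocess

GENERATED_SUFFIXES = (".g.dart", ".freezed.dart")


def is_target(path: str) -> bool:
    """A changed .dart file that is not a generated artifact."""
    return (
        path.endswith(".dart")
        and not path.endswith(GENERATED_SUFFIXES)
        and not path.startswith("build/")
    )


def changed_dart_files(base: str = "origin/main") -> list[str]:
    """Dart files added/copied/modified/renamed since the merge base with `base`."""
    out = subprocess.run(
        ["git", "diff", "--name-only", "--diff-filter=ACMR", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [p for p in out.splitlines() if is_target(p)]
```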

Windsurf Cascade

All the examples above can also run in Windsurf's Cascade AI agent. Here is one command in the chat:

Download Prompts & Contribute

We have bundled all the prompts and instructions into a single repository that you can clone into your project to get started immediately. We will keep adding more, so stay in touch and we will let you know when there are updates.

We also encourage you to contribute and add more Dart/Flutter or DCM specific prompts so that we all can benefit from your great prompts too.

Conclusion

AI is already changing the way we write code, but without guardrails it can also accelerate technical debt. By wiring Dart MCP and DCM MCP into your agentic workflows, you offload the repetitive cleanup work (analyze, fix, format, verify) to tools you trust, while keeping humans focused on judgment calls. The result is faster iteration, consistent quality, and a codebase that stays maintainable even as more of it is machine-generated.

We are committed to providing sharable prompts and instructions that your company and teams can use widely to keep code quality high and your codebase healthy with the Dart and Flutter and DCM MCP servers. We will continue publishing more training material and articles on our blog, along with upcoming videos on our YouTube channel.

If there is something you miss from DCM right now, want us to make something better, or have any general feedback, join our Discord server! We’d love to hear from you.

Happy prompting!
